About the CoMSES Model Library

Our mission is to help computational modelers develop, document, and share their computational models in accordance with community standards and good open science and software engineering practices. Model authors can publish their model source code in the Computational Model Library with narrative documentation as well as metadata that supports open science and emerging norms that facilitate software citation, computational reproducibility / frictionless reuse, and interoperability. Model authors can also request private peer review of their computational models. Models that pass peer review receive a DOI once published.

All users of models published in the library must cite model authors when they use and benefit from their code.

Please check out our model publishing tutorial and feel free to contact us if you have any questions or concerns about publishing your model(s) in the Computational Model Library.

We also maintain a curated database of over 7500 publications on agent-based and individual-based models, with detailed metadata on code availability and bibliometric information on the landscape of ABM/IBM publications, which we welcome you to explore.

Displaying 10 of 136 results for "José I Santos"

Maze with Q-Learning NetLogo extension

Kevin Kons, Fernando Santos | Published Tuesday, December 10, 2019

This is a re-implementation of the NetLogo model Maze (ROOP, 2006).

This re-implementation uses the Q-Learning NetLogo Extension to implement Q-Learning, which the original implementation does in native NetLogo code only.

Cliff Walking with Q-Learning NetLogo Extension

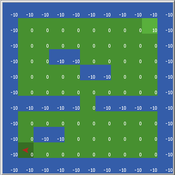

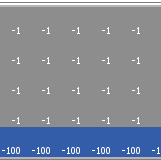

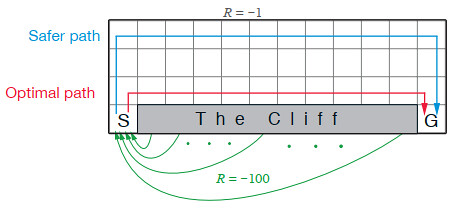

Kevin Kons, Fernando Santos | Published Tuesday, December 10, 2019

This model implements a classic scenario used in reinforcement learning, the “Cliff Walking Problem”. Consider the gridworld shown below (SUTTON; BARTO, 2018). This is a standard undiscounted, episodic task, with start and goal states, and the usual actions causing movement up, down, right, and left. Reward is -1 on all transitions except those into the region marked “The Cliff.” Stepping into this region incurs a reward of -100 and sends the agent instantly back to the start (SUTTON; BARTO, 2018).

The problem is solved in this model using the Q-Learning algorithm. The algorithm is implemented with the support of the Q-Learning NetLogo Extension.
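The task described above can be sketched in a few lines of tabular Q-Learning. This is a minimal Python illustration, not the model's NetLogo code and not the extension's API. The 4x12 grid layout follows the textbook example in Sutton and Barto, and the learning rate, exploration rate, episode count, and all function names are assumptions chosen for illustration.

```python
import random

ROWS, COLS = 4, 12                    # assumed grid size (textbook layout)
START, GOAL = (3, 0), (3, 11)         # bottom-left start, bottom-right goal
ACTIONS = [(-1, 0), (1, 0), (0, 1), (0, -1)]   # up, down, right, left


def step(state, action):
    """Apply one move and return (next_state, reward, done)."""
    r = min(max(state[0] + action[0], 0), ROWS - 1)   # clamp to the grid
    c = min(max(state[1] + action[1], 0), COLS - 1)
    if r == 3 and 0 < c < COLS - 1:   # stepped into the cliff
        return START, -100, False     # large penalty, back to the start
    return (r, c), -1, (r, c) == GOAL


def train(episodes=500, alpha=0.5, gamma=1.0, epsilon=0.1, seed=0):
    """Tabular Q-Learning with an epsilon-greedy behaviour policy.

    alpha, epsilon and episodes are illustrative assumptions;
    gamma=1.0 matches the undiscounted task.
    """
    rng = random.Random(seed)
    q = {((r, c), a): 0.0
         for r in range(ROWS) for c in range(COLS) for a in range(4)}
    for _ in range(episodes):
        state, done = START, False
        while not done:
            if rng.random() < epsilon:
                a = rng.randrange(4)                           # explore
            else:
                a = max(range(4), key=lambda i: q[(state, i)])  # exploit
            nxt, reward, done = step(state, ACTIONS[a])
            best_next = 0.0 if done else max(q[(nxt, i)] for i in range(4))
            # Off-policy update towards reward + best value of next state
            q[(state, a)] += alpha * (reward + gamma * best_next - q[(state, a)])
            state = nxt
    return q


def greedy_path(q, max_steps=100):
    """Follow the greedy policy from START; stop at GOAL or after max_steps."""
    state, path = START, [START]
    while state != GOAL and len(path) <= max_steps:
        a = max(range(4), key=lambda i: q[(state, i)])
        state, _, _ = step(state, ACTIONS[a])
        path.append(state)
    return path
```

Calling `greedy_path(train())` returns the sequence of cells visited by the learned greedy policy, which can be compared against the shortest route along the cliff edge.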

MarPEM: An Agent Based Model to Explore the Effects of Policy Instruments on the Transition of the Maritime Fuel System

G Bas, I Nikolic, K De Boo, Am Vaes - Van De Hulsbeek | Published Thursday, June 15, 2017

MarPEM is an agent-based model that can be used to study the effects of policy instruments on the transition away from heavy fuel oil (HFO).

A preliminary extension of the Hemelrijk 1996 model of reciprocal behavior to include feeding

Sean Barton | Published Monday, December 13, 2010 | Last modified Saturday, April 27, 2013

A more complete description of the model can be found in Appendix I as an ODD protocol. This model is an expansion of the Hemelrijk (1996) model to include a simple food-seeking behavior.

ADAM: Agent-based Demand and Assignment Model

D Levinson | Published Monday, August 29, 2011 | Last modified Saturday, April 27, 2013

The core algorithm is an agent-based model, which simulates travel patterns on a network based on microscopic decision-making by each traveler.

Walk Away in groups

Athena Aktipis | Published Thursday, March 17, 2016

This NetLogo model implements the Walk Away strategy in a spatial public goods game, where individuals have the ability to leave groups with insufficient levels of cooperation.

Peer reviewed NoD-Neg: A Non-Deterministic model of affordable housing Negotiations

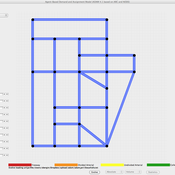

Aya Badawy, Nuno Pinto, Richard Kingston | Published Sunday, September 08, 2024

The Non-Deterministic model of affordable housing Negotiations (NoD-Neg) is designed for generating hypotheses about the possible outcomes of negotiating affordable housing obligations in new developments in England. By outcomes we mean the probabilities of the negotiation failing and/or the different possibilities of agreement.

The model focuses on two negotiations which are key in the provision of affordable housing. The first is between a developer (DEV) who is submitting a planning application for approval and the relevant Local Planning Authority (LPA) who is responsible for reviewing the application and enforcing the affordable housing obligations. The second negotiation is between the developer and a Registered Social Landlord (RSL) who buys the affordable units from the developer and rents them out. They can negotiate the price of selling the affordable units to the RSL.

The model runs the two negotiations on the same development project several times so that the agents representing stakeholders can apply different negotiation tactics (different agendas and concession-making tactics) and thereby explore the range of possible outcomes.

The model produces three types of outputs: (i) histograms showing the distribution of the negotiation outcomes in all the simulation runs and the probability of each outcome; (ii) a data file with the exact values shown in the histograms; and (iii) a conversation log detailing the exchange of messages between agents in each simulation run.

Replicating the Macy & Sato Model: Trust, Cooperation and Market Formation in the U.S. and Japan

Oliver Will | Published Saturday, August 29, 2009 | Last modified Saturday, April 27, 2013

A replication of the model “Trust, Cooperation and Market Formation in the U.S. and Japan” by Michael W. Macy and Yoshimichi Sato.

Expectation-Based Bayesian Belief Revision

C Merdes, Momme Von Sydow, Ulrike Hahn | Published Monday, June 19, 2017 | Last modified Monday, August 06, 2018

This model implements a Bayesian belief revision model that contrasts an ideal agent in possession of true likelihoods, an agent using a fixed estimate of trust in its source of information, and an agent updating its trust estimate.

Correlated random walk (Javascript)

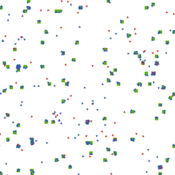

Viktoriia Radchuk, Uta Berger, Thibault Fronville | Published Tuesday, May 09, 2023

The first simple movement models used unbiased and uncorrelated random walks (RW). In such models of movement, the direction of the movement is totally independent of the previous movement direction. In other words, at each time step the direction in which an individual is moving is completely random. This process is referred to as Brownian motion.

On the other hand, in correlated random walks (CRW) the choice of the movement direction depends on the direction of the previous movement. At each time step, the movement direction has a tendency to point in the same direction as the previous one. This movement model fits observational movement data well for many animal species.

The presented agent-based model simulates the movement of the agents as a correlated random walk (CRW). The turning angle at each time step follows the von Mises distribution with a ϰ of 10. The closer ϰ gets to zero, the closer the von Mises distribution gets to a uniform distribution. The larger ϰ gets, the more the von Mises distribution approaches a normal distribution concentrated around the mean (0°).

In this script the turning angles (following the von Mises distribution) are generated based on the instructions from N. I. Fisher (2011).

This model is implemented in JavaScript and can be used as a building block for more complex agent-based models that rely on describing the movement of individuals with CRW.
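The correlated random walk described above can be illustrated with a short sketch. It is written in Python rather than the model's JavaScript, and it draws turning angles from the standard library's `random.vonmisesvariate` instead of reproducing the model's own sampler. The function name, the unit step length, and the random initial heading are assumptions made for illustration.

```python
import math
import random


def correlated_random_walk(steps, kappa=10.0, step_length=1.0, seed=None):
    """Return the list of (x, y) positions of a correlated random walk.

    kappa is the concentration of the von Mises turning-angle distribution:
    values near 0 give near-uniform turns (an uncorrelated walk), large
    values keep the heading close to the previous one.
    """
    rng = random.Random(seed)
    x, y = 0.0, 0.0
    heading = rng.uniform(0.0, 2.0 * math.pi)   # assumed random start heading
    path = [(x, y)]
    for _ in range(steps):
        # Turning angle centred on 0 rad, added to the previous heading
        turn = rng.vonmisesvariate(0.0, kappa)
        heading = (heading + turn) % (2.0 * math.pi)
        x += step_length * math.cos(heading)
        y += step_length * math.sin(heading)
        path.append((x, y))
    return path
```

For example, `correlated_random_walk(100, kappa=10.0, seed=1)` yields 101 positions. Rerunning with `kappa=0.0` gives an uncorrelated walk for comparison.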
