About the CoMSES Model Library

Our mission is to help computational modelers develop, document, and share their computational models in accordance with community standards and good open science and software engineering practices. Model authors can publish their model source code in the Computational Model Library with narrative documentation as well as metadata that supports open science and emerging norms that facilitate software citation, computational reproducibility, frictionless reuse, and interoperability. Model authors can also request private peer review of their computational models. Models that pass peer review receive a DOI once published.

All users of models published in the library must cite model authors when they use and benefit from their code.

Please check out our model publishing tutorial and feel free to contact us if you have any questions or concerns about publishing your model(s) in the Computational Model Library.

We also maintain a curated database of over 7,500 publications of agent-based and individual-based models, with detailed metadata on code availability and bibliometric information on the landscape of ABM/IBM publications, which we welcome you to explore.

Displaying 10 of 811 results for "Momme Von Sydow"

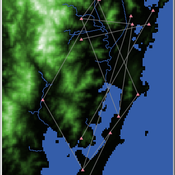

Shellmound Trade

Henrique de Sena Kozlowski | Published Saturday, June 15, 2024

This model simulates different trade dynamics in shellmound (sambaqui) builder communities in coastal Southern Brazil. It features two simulation scenarios: one in which all sites are identical and another that tests different rates of cooperation. The purpose of the model is to analyze the networks created by the trade dynamics and explore the different ways in which sambaqui communities were articulated in the past.

How It Works

There are a few rules operating in this model. In either simulation mode, each tick every agent first produces an amount of resources based on the suitability of the patches inside its occupation-radius; what happens next depends on the selected trade dynamic. For BRN?, the agents repay their owed resources, update their reputation value, and then trade again if they need to. For GRN?, the agents simply trade with a connected agent if they need to. Finally, each agent consumes a random amount of the resources it owns and, depending on the result, either grows (splits) into a new site or is removed from the simulation. The simulation runs for 1000 ticks.

Each patch corresponds to a 300 × 300 m square of land on the southern coast of Santa Catarina State, Brazil, and each agent represents a shellmound (sambaqui) builder community. The world data were derived from an SRTM raster image (1 arc-second) in ArcMap. The sites can be exported as a shapefile (.shp) vector for display in ArcMap. The model uses the SIRGAS 2000 / UTM zone 22S projection.
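
The per-tick schedule described above can be sketched in Python roughly as follows. This is a minimal, hypothetical sketch, not the model's own code: `BRN?` and `GRN?` are read only as the switch between the two trade dynamics, and the need threshold (`NEED`), the richest-partner trade rule, the reputation increments, the consumption range, and the split threshold are all placeholder assumptions.

```python
import random
from dataclasses import dataclass, field
from typing import List, Optional

NEED = 1.0  # hypothetical: resources a site wants to hold after trading


@dataclass
class Site:
    suitability: float  # hypothetical: resources produced per tick from patches in occupation-radius
    resources: float = 0.0
    debt: float = 0.0  # BRN only: amount still owed to `creditor`
    reputation: float = 1.0
    creditor: Optional["Site"] = field(default=None, repr=False, compare=False)
    partners: List["Site"] = field(default_factory=list, repr=False, compare=False)


def trade(site: Site, brn: bool) -> None:
    """Hypothetical rule: a site below NEED asks its richest connected partner for the gap."""
    gap = NEED - site.resources
    if gap <= 0 or not site.partners:
        return
    giver = max(site.partners, key=lambda p: p.resources)
    amount = min(gap, max(giver.resources - NEED, 0.0))
    if amount <= 0:
        return
    giver.resources -= amount
    site.resources += amount
    if brn:  # BRN-style exchange: the transfer becomes a debt to repay later
        site.debt += amount
        site.creditor = giver


def tick(sites: List[Site], rng: random.Random, brn: bool) -> List[Site]:
    for s in sites:  # 1. production
        s.resources += s.suitability
    if brn:  # 2a. BRN?: repay owed resources, then update reputation
        for s in sites:
            if s.creditor is not None and s.debt > 0:
                paid = min(s.debt, s.resources)
                s.resources -= paid
                s.creditor.resources += paid
                s.debt -= paid
                s.reputation += 0.1 if s.debt == 0 else -0.1
    for s in sites:  # 2b. both modes: trade with a connected site if needed
        trade(s, brn)
    next_sites = []
    for s in sites:  # 3. consume a random amount, then split or be removed
        s.resources -= rng.uniform(0.5, 1.5)
        if s.resources < 0:
            continue  # site removed from the simulation
        next_sites.append(s)
        if s.resources > 2 * NEED:  # hypothetical split threshold
            s.resources /= 2
            child = Site(s.suitability, s.resources, partners=[s])
            s.partners.append(child)
            next_sites.append(child)
    alive = {id(s) for s in next_sites}  # drop links to removed sites
    for s in next_sites:
        s.partners = [p for p in s.partners if id(p) in alive]
    return next_sites
```

Calling `tick` repeatedly (1000 times, to match the original run length) advances the sketch. Because it has no spatial limit on splitting, the number of sites can grow without bound; the real model constrains sites through the landscape.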

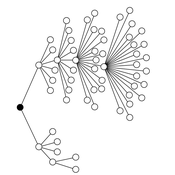

Peer reviewed Casting: A Bio-Inspired Method for Restructuring Machine Learning Ensembles

Colin Lynch, Bryan Daniels | Published Thursday, September 18, 2025

The wisdom of the crowd refers to the phenomenon in which a group of individuals, each making independent decisions, can collectively arrive at highly accurate solutions, often more accurate than any individual within the group. This principle relies heavily on independence: if individual opinions are unbiased and uncorrelated, their errors tend to cancel out when averaged, reducing overall error. However, in real-world social networks, individuals are often influenced by their neighbors, which introduces correlations between decisions. Such social influence can amplify biases, undermining the benefits of independent voting. This trade-off between independence and interdependence has striking parallels in ensemble learning methods in machine learning. Bagging (bootstrap aggregating) improves classification performance by combining independently trained weak learners, which chiefly reduces variance. Boosting, on the other hand, explicitly introduces sequential dependence among learners, where each learner focuses on correcting the errors of its predecessors. This process chiefly reduces bias, but it can also reinforce systematic errors or noise present in the data. Here, we introduce a new meta-algorithm, casting, which captures this biological and computational trade-off. Casting forms partially connected groups (“castes”) of weak learners that are internally linked through boosting, while the castes themselves remain independent and are aggregated using bagging. This creates a continuum between full independence (i.e., bagging) and full dependence (i.e., boosting). The method allows model capabilities to be tested across values of the hyperparameter that controls connectedness. We specifically investigate classification tasks, but the method can be used for regression tasks as well. Ultimately, casting can provide insights into how real systems contend with classification problems.
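
The casting idea can be illustrated with a small pure-Python sketch. It is not the authors' implementation: the choice of AdaBoost with decision stumps as the boosting step, the names `fit_casting` and `caste_size`, and the use of caste size as the connectedness hyperparameter are assumptions made for illustration. With `caste_size=1` every learner is independent (plain bagging); with `caste_size` equal to the number of learners there is a single boosted chain, here trained on one bootstrap sample.

```python
import math
import random


def fit_stump(X, y, w):
    """Weighted decision stump: returns (error, feature, threshold, polarity); labels are -1/+1."""
    best = None
    for f in range(len(X[0])):
        for t in sorted({row[f] for row in X}):
            for pol in (1, -1):
                err = sum(wi for row, yi, wi in zip(X, y, w)
                          if (pol if row[f] >= t else -pol) != yi)
                if best is None or err < best[0]:
                    best = (err, f, t, pol)
    return best


def stump_predict(stump, row):
    _, f, t, pol = stump
    return pol if row[f] >= t else -pol


def fit_caste(X, y, size):
    """AdaBoost chain of up to `size` stumps: the internally dependent learners of one caste."""
    w = [1.0 / len(X)] * len(X)
    members = []
    for _ in range(size):
        stump = fit_stump(X, y, w)
        err = max(stump[0], 1e-10)  # avoid division by zero for a perfect stump
        if err >= 0.5:
            break
        alpha = 0.5 * math.log((1 - err) / err)
        members.append((alpha, stump))
        # Up-weight misclassified samples so the next stump focuses on them.
        w = [wi * math.exp(-alpha * yi * stump_predict(stump, row))
             for row, yi, wi in zip(X, y, w)]
        total = sum(w)
        w = [wi / total for wi in w]
    return members


def caste_predict(caste, row):
    score = sum(alpha * stump_predict(s, row) for alpha, s in caste)
    return 1 if score >= 0 else -1


def fit_casting(X, y, n_learners, caste_size, seed=0):
    """Independent castes, each boosted on its own bootstrap sample (the bagging layer)."""
    rng = random.Random(seed)
    castes = []
    for _ in range(n_learners // caste_size):
        idx = [rng.randrange(len(X)) for _ in range(len(X))]
        castes.append(fit_caste([X[i] for i in idx], [y[i] for i in idx], caste_size))
    return castes


def predict_casting(castes, row):
    """Unweighted majority vote across castes."""
    votes = sum(caste_predict(c, row) for c in castes)
    return 1 if votes >= 0 else -1
```

Sweeping `caste_size` between 1 and `n_learners` while holding `n_learners` fixed is one way to move along the bagging-to-boosting continuum the abstract describes.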

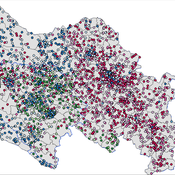

Location Analysis Hybrid ABM

Lukasz Kowalski | Published Friday, February 08, 2019

The purpose of this hybrid ABM is to answer the question: where is the best place for a new swimming pool in a region of Krakow, Poland?

The model is described in detail using the ODD protocol, which can be found at the end of my article published in JASSS (available online: http://jasss.soc.surrey.ac.uk/22/1/1.html ). The article also compares this kind of model with spatial interaction models. Before adapting the model to other purposes, areas of interest, or services, I recommend reading the ODD protocol and the article.

I published two videos on YouTube that present the model: https://www.youtube.com/watch?v=iFWG2Xv20Ss , https://www.youtube.com/watch?v=tDTtcscyTdI&t=1s

…

Evolution of altruistic punishment

Marco Janssen | Published Wednesday, September 03, 2008 | Last modified Saturday, March 09, 2019

In the model, agents decide whether or not to contribute to the public good of a group, and cooperators may punish defectors at a cost. The model is based on group selection, and is used to understan…
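
The within-group payoffs of a public goods game with costly punishment can be sketched as below. This is a generic, hypothetical illustration, not this model's code: the linear public goods payoff, the parameter names (`endowment`, `multiplier`, `punish_cost`, `fine`), and their values are assumptions, and the group-selection layer is not shown.

```python
from dataclasses import dataclass


@dataclass
class Agent:
    contributes: bool
    punishes: bool = False  # only meaningful for contributors


def group_payoffs(agents, endowment=1.0, multiplier=3.0, punish_cost=0.2, fine=0.8):
    """Payoffs for one round of a linear public goods game with costly punishment (hypothetical)."""
    n = len(agents)
    n_contributors = sum(a.contributes for a in agents)
    n_punishers = sum(a.contributes and a.punishes for a in agents)
    n_defectors = n - n_contributors
    # The pot is multiplied and shared equally among all group members.
    share = multiplier * endowment * n_contributors / n
    payoffs = []
    for a in agents:
        if a.contributes:
            payoff = share  # the endowment went into the pot
            if a.punishes:
                payoff -= punish_cost * n_defectors  # cost of punishing each defector
        else:
            payoff = endowment + share - fine * n_punishers  # fined once per punisher
        payoffs.append(payoff)
    return payoffs


group = [Agent(True, True), Agent(True, True), Agent(True, False), Agent(False)]
print(group_payoffs(group))
```

In this example the non-punishing cooperator earns more than the punishers, which illustrates why punishment is costly to sustain within a single group and why group-level selection is invoked to explain it.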

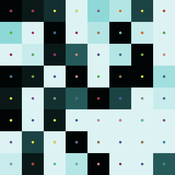

Spatiotemporal Visualization of Emotional and Emotional-related Mental States

Luis Macedo | Published Monday, November 07, 2011 | Last modified Saturday, April 27, 2013

A system that receives the agents’ emotional data, their emotion-related data (such as motivations and beliefs), and their locations from an agent-based social simulation, and visualizes all of this information on a two-dimensional map of the geographic region the agents inhabit as well as on graphs over time.

This is the final version of the model. To simulate the normative dynamics, we used the EmIL (EMergence In the Loop) framework, which was kindly provided by Ulf Lotzmann (http://cfpm.org/EMIL-D5.1.pdf).

Peak-seeking Adder

Julia Kasmire, Janne M Korhonen | Published Tuesday, December 02, 2014 | Last modified Friday, February 20, 2015

Continuing on from the Adder model, this adaptation explores how rationality, learning, and uncertainty influence the exploration of complex landscapes representing technological evolution.

Peer reviewed Mission Cattle

Isaac Ullah | Published Monday, December 15, 2025

The model examines cattle herd dynamics on a patchy grassland subject to two exogenous pressures: periodic raiding events that remove animals and scheduled management culling that can target males and/or females. It is intended for comparative experiments on how raiding frequency, culling schedules, vegetation dynamics, and life-history parameters interact to shape herd persistence. The model was specifically designed to test the scenario of cattle herding in the arid grasslands of southern Arizona and northern Sonora during the mission period (late 17th through late 18th centuries CE). In this period, herds were locally managed by Spanish mission personnel and local O’odham groups. Herds were culled mostly for local consumption of meat, hides, and tallow, but the mission herds were often targets for raiding by neighboring groups. The main purpose of the model is to examine herd dynamics in a seasonally variable, arid environment where herds are subject to both intentional internal harvest (culling) and external harvest (raiding).
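
The interplay of births, mortality, culling, and raiding can be sketched with a simple annual step. This is a hypothetical illustration, not the model's code: it replaces the patchy grassland with a single aggregate `capacity` scaled by a random rainfall factor, uses a yearly time step, and all parameter names and default values (`birth_rate`, `raid_interval`, `cull_males`, and so on) are assumptions.

```python
import random
from dataclasses import dataclass


@dataclass
class Herd:
    females: int
    males: int

    @property
    def size(self):
        return self.females + self.males


def survivors(n, death_rate, rng):
    return sum(1 for _ in range(n) if rng.random() >= death_rate)


def step(herd, year, rng, capacity=200, birth_rate=0.6, death_rate=0.05,
         raid_interval=5, raid_fraction=0.3, cull_males=0.2, cull_females=0.0):
    # Forage per head falls as the herd nears the (hypothetical) grassland capacity,
    # scaled by a random rainfall factor to mimic a variable, arid environment.
    rainfall = rng.uniform(0.5, 1.5)
    forage = min(1.0, rainfall * capacity / max(herd.size, 1))
    births = sum(1 for _ in range(herd.females) if rng.random() < birth_rate * forage)
    calves_f = sum(1 for _ in range(births) if rng.random() < 0.5)
    females = survivors(herd.females, death_rate, rng) + calves_f
    males = survivors(herd.males, death_rate, rng) + (births - calves_f)
    # Management culling (internal harvest).
    females -= round(females * cull_females)
    males -= round(males * cull_males)
    # Periodic raiding (external harvest).
    if raid_interval and year > 0 and year % raid_interval == 0:
        females -= round(females * raid_fraction)
        males -= round(males * raid_fraction)
    return Herd(females, males)


def run(years, seed=0, **params):
    """Return the herd size at the start and after each simulated year."""
    rng = random.Random(seed)
    herd = Herd(females=50, males=50)
    history = [herd.size]
    for year in range(years):
        herd = step(herd, year, rng, **params)
        history.append(herd.size)
    return history
```

Comparing `run(100, raid_interval=3)` with `run(100, raid_interval=10)`, or varying `cull_females`, is the kind of comparative experiment the description mentions, here on a much cruder landscape than the model's.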

A Model to Unravel the Complexity of Rural Food Security

Stefano Balbi, Samantha Dobbie | Published Monday, August 22, 2016 | Last modified Sunday, December 02, 2018

An ABM that simulates the behaviour of households within a village to observe the emergent properties of the system in terms of food security. The model quantifies food availability, access, utilisation and stability.

Mesoscopic Effects in an Agent-Based Bargaining Model in Regular Lattices

David Poza, José Manuel Galán Ordax, José Santos, Adolfo López-Paredes | Published Thursday, February 02, 2017 | Last modified Wednesday, April 25, 2018

We propose an agent-based model in which a fixed, finite population of tagged agents iteratively plays the Nash demand game on a regular lattice. The model extends the bargaining model by Axtell, Epstein and Young.
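
The core mechanics can be sketched in Python. This is a hypothetical illustration, not the authors' code: the three demand levels (30, 50, 70 out of a pie of 100), the bounded per-tag memory, the random-exploration rate `noise`, and the von Neumann neighbourhood on a wrap-around lattice are assumptions chosen for the example.

```python
import random
from collections import defaultdict, deque

PIE = 100
DEMANDS = (30, 50, 70)  # hypothetical low / medium / high demands


def nash_demand_payoffs(d1, d2, pie=PIE):
    """Both players get their demands if these fit in the pie; otherwise both get nothing."""
    return (d1, d2) if d1 + d2 <= pie else (0, 0)


def best_response(memory, demands=DEMANDS):
    """Demand with the highest total payoff against the remembered opponent demands."""
    return max(demands, key=lambda d: sum(nash_demand_payoffs(d, o)[0] for o in memory))


def lattice_neighbours(x, y, width, height):
    """Von Neumann neighbourhood on a torus-shaped regular lattice."""
    return [((x + dx) % width, (y + dy) % height)
            for dx, dy in ((1, 0), (-1, 0), (0, 1), (0, -1))]


class Agent:
    def __init__(self, tag, memory_length=5, noise=0.1):
        self.tag = tag
        self.noise = noise
        # One bounded memory of opponent demands per opponent tag.
        self.memory = defaultdict(lambda: deque(maxlen=memory_length))

    def choose_demand(self, opponent_tag, rng):
        mem = self.memory[opponent_tag]
        if not mem or rng.random() < self.noise:
            return rng.choice(DEMANDS)  # explore at random
        return best_response(mem)

    def remember(self, opponent_tag, opponent_demand):
        self.memory[opponent_tag].append(opponent_demand)
```

In each round, an agent would play against each lattice neighbour, choosing a demand conditioned on that neighbour's tag and then remembering the neighbour's demand. Keeping a separate memory per tag is what allows different conventions to emerge between and within tag groups.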
